Kullback-Leibler (KL) divergence is a popular way to quantify the difference between two probability distributions. It measures how one distribution differs from a second, reference distribution. KL divergence is not symmetric: the divergence of A from B is generally different from the divergence of B from A.

KL divergence is often used in machine learning, information theory, and statistics. It has applications in various domains, including natural language processing, image processing, and recommendation systems. Understanding KL divergence can be valuable in model evaluation, feature selection, and generative modeling tasks.

Python offers many libraries and functions that facilitate calculating and utilizing KL divergence. These libraries, such as NumPy, SciPy, and scikit-learn, provide efficient algorithms and tools for complex computations and statistical analysis. With Python’s ease of use and extensive library ecosystem, implementing KL divergence and integrating it into data analysis pipelines becomes straightforward.


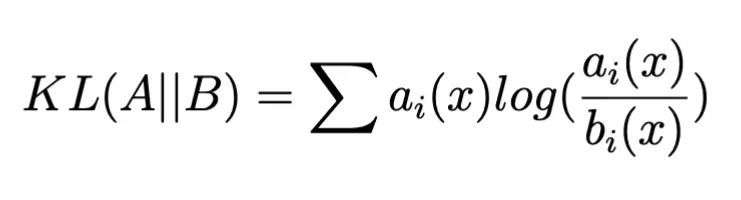

The formula for KL Divergence

KL divergence between two probability distributions, A and B, is

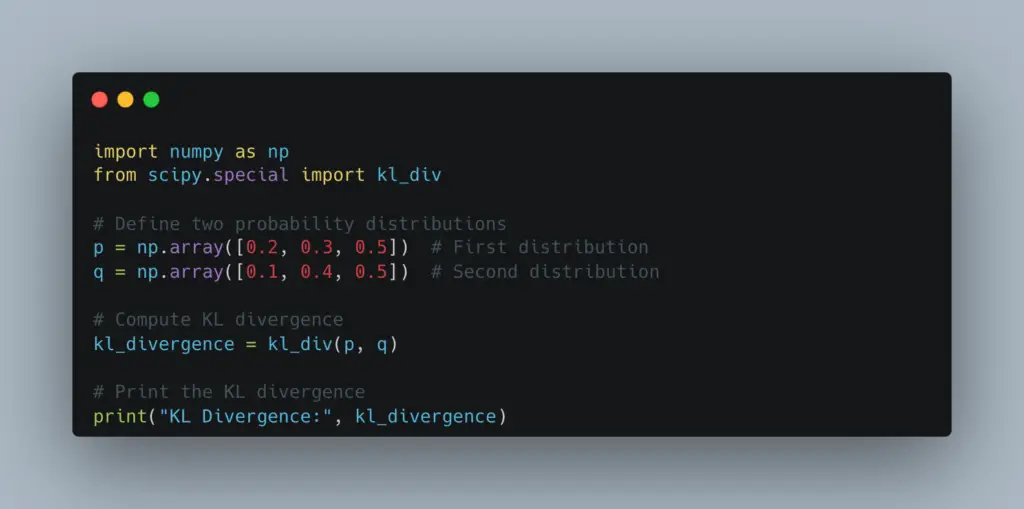
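For reference, the standard discrete definition can be written as follows. This is a sketch that assumes the image above shows the usual form, which matches the Python implementation in this article; the index $i$ over outcomes is notation introduced here.

```latex
D_{\mathrm{KL}}(A \parallel B) = \sum_{i} A(i) \, \log \frac{A(i)}{B(i)}
```

Terms with $A(i) = 0$ are taken to contribute 0, and using the natural logarithm gives the result in nats.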

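The formula is easy to implement as a Python function, and SciPy provides the kl_div() function in scipy.special. Note that kl_div() works elementwise: for each pair of entries it computes a * log(a / b) - a + b, so its output must be summed. The sum equals the KL divergence when both inputs are normalized to sum to 1. An example implementation of the formula above, for NumPy arrays a and b,

import numpy as np

def kl_divergence(a, b):
    # Entries where a == 0 contribute 0 to the sum
    return np.sum(np.where(a != 0, a * np.log(a / b), 0))

or

from scipy.special import kl_div

# kl_div() returns one term per element, so sum the terms
kl_value = np.sum(kl_div(a, b))

A simple Python KL divergence example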

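Let’s consider two simple probability distributions represented as arrays in Python, A = [0.2, 0.3, 0.5] and B = [0.1, 0.4, 0.5]. Each sums to 1. The KL divergence of A from B is 0.2·ln(0.2/0.1) + 0.3·ln(0.3/0.4) + 0.5·ln(0.5/0.5) ≈ 0.0523 nats, and either approach described above reproduces this value.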
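A minimal sketch of this computation is shown below. The variable names are illustrative, and the use of rel_entr() from scipy.special (which computes the a * log(a / b) terms directly) is an addition not mentioned in the original text. The last line also computes the divergence in the reverse direction to show that the two directions differ.

```python
import numpy as np
from scipy.special import kl_div, rel_entr


def kl_divergence(a, b):
    """Discrete KL divergence D(a || b) in nats; entries with a == 0 contribute 0."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    mask = a != 0
    return np.sum(a[mask] * np.log(a[mask] / b[mask]))


A = np.array([0.2, 0.3, 0.5])
B = np.array([0.1, 0.4, 0.5])

kl_manual = kl_divergence(A, B)        # direct implementation of the formula
kl_scipy = np.sum(kl_div(A, B))        # elementwise a*log(a/b) - a + b, summed
kl_rel_entr = np.sum(rel_entr(A, B))   # elementwise a*log(a/b), summed
kl_reverse = kl_divergence(B, A)       # divergence of B from A

print("D(A || B), manual:  ", kl_manual)
print("D(A || B), kl_div:  ", kl_scipy)
print("D(A || B), rel_entr:", kl_rel_entr)
print("D(B || A), manual:  ", kl_reverse)
```

The three values for D(A || B) agree because both arrays sum to 1, so the extra - a + b terms in kl_div() cancel out in the sum.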

KL divergence for Gaussian/Normal distributions

A Gaussian distribution, also known as a normal distribution, is a continuous, symmetric, bell-shaped probability distribution. It is one of the most widely used probability distributions in statistics and probability theory.

Let’s explore how we can tackle it in Python.

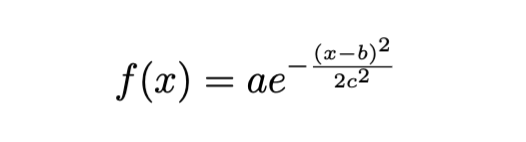

Gaussian distribution

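The Gaussian function used here is parameterized by its coefficient a (the height of the peak), the location of the peak b, and the standard deviation c (also known as the RMS width). It is given by the following equation,

$$f(x) = a \exp\left(-\frac{(x - b)^2}{2c^2}\right)$$

This curve is a normalized probability density function (PDF) only when a = 1 / (c√(2π)), so that the area under it equals 1.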

The Gaussian distribution has several important properties:

- It is symmetric, meaning the distribution is the same on both sides of the mean.

- The mean, median, and mode of a Gaussian distribution are all equal and located at the center of the distribution.

- The distribution is completely defined by its mean and standard deviation.

- The curve of a Gaussian distribution is asymptotic, meaning it approaches but never touches the x-axis.
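These properties can be checked numerically. The sketch below is illustrative only: it assumes the gaussian(x, a, b, c) form used in this article, picks b = 30 and c = 10 arbitrarily, and sets a = 1 / (c√(2π)) so that the curve is a normalized PDF. The grid and variable names are assumptions introduced here.

```python
import numpy as np


def gaussian(x, a, b, c):
    """Gaussian curve with peak height a, peak location b, standard deviation c."""
    return a * np.exp(-(x - b) ** 2 / (2 * c ** 2))


b, c = 30.0, 10.0
a = 1.0 / (c * np.sqrt(2.0 * np.pi))  # coefficient that normalizes the area to 1

# Grid that is symmetric around the mean and spans 8 standard deviations each way
x = np.linspace(b - 8 * c, b + 8 * c, 16001)
pdf = gaussian(x, a, b, c)

dx = x[1] - x[0]
area = np.sum(pdf) * dx                     # Riemann-sum approximation of the area
is_symmetric = np.allclose(pdf, pdf[::-1])  # same values on both sides of the mean
peak_location = x[np.argmax(pdf)]           # mode of the curve
smallest_value = pdf.min()                  # tails approach zero but stay positive

print("area under curve:", area)
print("symmetric about the mean:", is_symmetric)
print("peak located at:", peak_location)
print("smallest sampled value:", smallest_value)
```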

A function for Gaussian distribution can easily be implemented in Python using the equation above as,

import numpy as np

def gaussian(x, a, b, c):

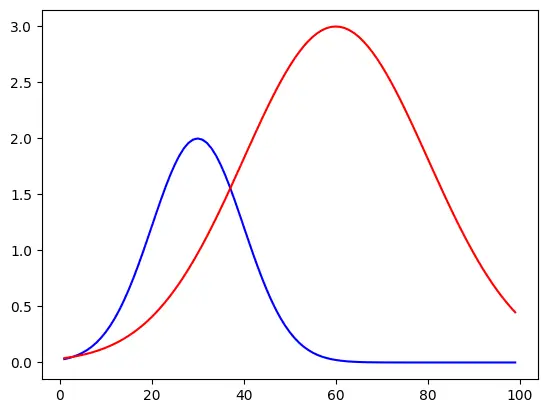

return a*np.exp(-(x-b)**2/ (2*c**2))Let’s plot two Gaussian distributions using this function, one with coefficient a=2, centered at b = 30 and standard deviation=10 and other with coefficient a=3, centered at b = 60 and standard deviation=20

import matplotlib.pyplot as plt

# Sample both curves at the integers 1..99
x = np.arange(1, 100)
A = gaussian(x, 2, 30, 10)
B = gaussian(x, 3, 60, 20)

plt.plot(x, A, c="blue")
plt.plot(x, B, c="red")
plt.show()

Output:

The blue and red curves are distributions A and B, respectively.

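The KL divergence between these two Gaussian curves can be computed using the function in SciPy or the function implemented from the KL divergence formula. KL divergence is defined for probability distributions, so the sampled curves are first normalized so that each sums to 1. Note that kl_div() returns one term per element, so the terms are summed.

from scipy.special import kl_div

# Normalize the sampled curves so that each sums to 1
P, Q = A / A.sum(), B / B.sum()

kl_value = np.sum(kl_div(P, Q))
print("KL divergence between Gaussian distribution A and B is ", kl_value)

Or

import numpy as np

def kl_divergence(a, b):
    return np.sum(np.where(a != 0, a * np.log(a / b), 0))

P, Q = A / A.sum(), B / B.sum()
kl_value = kl_divergence(P, Q)
print("KL divergence between Gaussian distribution A and B is ", kl_value)

Conclusion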

We have now learned what KL divergence is and seen its mathematical definition. We implemented KL divergence in Python, saw how to compute and plot Gaussian curves, and computed the KL divergence between two Gaussian distributions.

